ODI 12c Block the same load plan running again on a schedule when its still running from a previous scheduled run.

Stopping Load Plan from running multiple instances at one time

The Load Plan in ODI is a very handy functionality to orchestrate multiple scenarios in an orderly fashion. The only drawback with this functionality is there is no mechanism to stop multiple instances of the same load plan running at the same time.

This solution attempts to provide a generic approach that can be utilised with any load plan, more like a plug and play approach.

This solution provides additional functionality of populating a system status table that can be displayed on OBIEE dash board to provide visibility of Load Plan runs to end users. The solution also captures the parameters provided to the Load Plan and presents in the monitoring table

The following components are built and created as part of this solution

DB Objects –

WC_SYSTEM_STATUS_D – Database Table

WC_SYSTEM_STATUS_SEQ – Database Sequence

ODI Prodedures – Four ODI procedures are created. The code is given at the end of the document

- LP_START – Inserts row into the WC_SYSTEM_STATUS_D table and checks to see if any other instance of the same load plan is running and invokes LP_DOUBLE_RUN procedure

- LP_COMPLETE – To mark the load plan as successfully complete

- LP_DOUBLE_RUN – Updates the current run with status as A, Abort and results in an error, invoking the LP_ERROR

- LP_ERROR – Updates the current run with status F, Failure if not already aborted

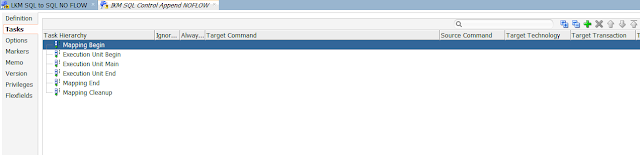

The steps to integrate these objects with any required load plan are

Create the first step of the load plan as scenario execution step LP_START

Create the last step of the load plan as scenario execution step LP_COMPLETE

Create two exception steps and add scenario steps as shown in the figure below

For the root step of the load plan provide the exception step as LP_ERROR and select the Exception Behaviour as ‘Run Exception and Raise’

For the LP_START step of the load plan provide the exception step as LP_DOUBLE_RUN and select the Exception Behaviour as ‘Run Exception and Raise’

That is it. You will never see the same load plan running multiple times at any time.

Here is the Code that needs to be pasted into 4 ODI Procedures that are integrated into the load plan.

Code

CREATE TABLE "WC_SYSTEM_STATUS_D"

( "JOB_ID" NUMBER NOT NULL ENABLE,

"JOB_NAME" VARCHAR2(1000 BYTE),

"START_TIMESTAMP" DATE,

"END_TIMESTAMP" DATE,

"STATUS_INDICATOR" VARCHAR2(10 BYTE),

"STATUS_DESCRIPTION" VARCHAR2(100 BYTE),

"PARAMS" VARCHAR2(100 BYTE),

"GUID" VARCHAR2(1000 BYTE)

) ;

CREATE SEQUENCE "WC_SYSTEM_STATUS_SEQ" MINVALUE 1 MAXVALUE 9999999999999999999999999999 INCREMENT BY 1 START WITH 50 CACHE 20 NOORDER NOCYCLE NOPARTITION ;

LP_START

declare

is_running varchar2(1) := 'C'; -- not running

CURSOR c_ind IS

SELECT status_indicator

from wc_system_status_d

where status_indicator = 'R'

and job_name = '<%=odiRef.getLoadPlanInstance("LOAD_PLAN_NAME")%>'

and guid <> '<%=odiRef.getLoadPlanInstance("BATCH_GUID")%>';

l_params varchar2(1000);

begin

begin

select LISTAGG(display_name,';') WITHIN GROUP(ORDER BY display_name) display_name

INTO l_params

from (

select t1.var_name||' - '||t1.var_value display_name

from crlodistg_odi_repo.snp_lpi_var t1

,(select * from (select i_lp_inst,load_plan_name

from crlodistg_odi_repo.snp_lpi_run

where load_plan_name = '<%=odiRef.getLoadPlanInstance("LOAD_PLAN_NAME")%>'

order by start_date desc) where rownum = 1) t2

where t1.i_lp_inst = t2.i_lp_inst

);

exception when others then null;

end;

INSERT INTO wc_system_status_d

VALUES

(wc_system_status_seq.nextval,'<%=odiRef.getLoadPlanInstance("LOAD_PLAN_NAME")%>',CURRENT_TIMESTAMP,NULL,'R','Running',l_params,'<%=odiRef.getLoadPlanInstance("BATCH_GUID")%>');

commit;

OPEN c_ind;

FETCH c_ind INTO is_running;

CLOSE c_ind;

IF NVL(is_running,'C') = 'R' THEN

select 1/0 INTO is_running from dual; -- Causing Error

END IF;

-- Intentionally not defined any exception handler here to push this task into error and hence raise the exception on the load plan which updates the batch as About and

-- stops the execution

end;

LP_COMPLETE

begin

UPDATE wc_system_status_d

SET end_timestamp = CURRENT_TIMESTAMP

,status_indicator = 'C'

,status_description = 'Complete'

WHERE guid = '<%=odiRef.getLoadPlanInstance("BATCH_GUID")%>';

end;

LP_DOUBLE_RUN

begin

UPDATE wc_system_status_d

SET end_timestamp = CURRENT_TIMESTAMP

,status_indicator = 'A'

,status_description = 'Abort. Currently another batch running'

WHERE guid = '<%=odiRef.getLoadPlanInstance("BATCH_GUID")%>';

end;

LP_ERROR

declare

is_running varchar2(1);

begin

select status_indicator into is_running from wc_system_status_d where guid = '<%=odiRef.getLoadPlanInstance("BATCH_GUID")%>';

if is_running = 'R' then

UPDATE wc_system_status_d

SET end_timestamp = CURRENT_TIMESTAMP

,status_indicator = 'F'

,status_description = 'Failed'

WHERE guid = '<%=odiRef.getLoadPlanInstance("BATCH_GUID")%>';

end if;

end;

Over and Out

DeliverBI Team